Paper Explainer: Assessing Astrophysical Uncertainties in Direct Detection with Galaxy Simulations

This is a description of a recent paper of mine, with Jonathan Sloane (a graduate student in the astro group here at Rutgers), Alyson Brooks (also a professor at Rutgers), and Fabio Governato (faculty at U Washington).

Alyson and Fabio are members of the N-Body Shop, a collaboration of astronomers who write and use computer codes to simulate galaxies (among other things). They start with the initial conditions of the Universe shortly after the Big Bang, when the Universe was smooth and pretty featureless. The only structure was small primordial fluctuations that made some regions a tiny bit denser than others. For theoretical physicists, the source and spectrum of these fluctuations are of great interest, since they give us clues about the physics of inflation, but for our purposes the fluctuations are just inputs.

Then the simulation just lets time move forward, and the matter in the Universe evolves under the laws of Nature, in particular gravity. What they find is that galaxies form, and those galaxies look a lot like the ones we see around us.

The cutting-edge work that Alyson and Fabio are doing now is to include normal matter in their simulations: baryons, the stuff we are all made of. Previously, simulations like these were dark matter-only. This is fine, as far as it goes, since dark matter vastly outmasses the regular matter in the Universe. Because most of a galaxy is made of dark matter, if you simulate the motion of the dark matter over time, the baryons mostly just come along for the ride. And simulating baryons is hard. Dark matter is collisionless (or nearly so), so all you need to do is track its gravitational interactions. Baryons do annoying things like interact with radiation, cool, and form stars, which explode, and so on.
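To make "all you need to do is track the gravitational interactions" concrete, here is a minimal Python sketch of a collisionless N-body update. Every particle feels the softened Newtonian pull of every other particle, and positions and velocities are advanced with a kick-drift-kick leapfrog. This is an illustrative toy, not the N-Body Shop's code: real cosmological codes use much faster tree or mesh gravity solvers and add hydrodynamics and star formation for the baryons. Units are arbitrary with G = 1. The softening length `eps` is an assumed parameter that smooths gravity on small scales, loosely like a simulation's resolution limit.

```python
import numpy as np

def accelerations(pos, mass, eps, G=1.0):
    """Softened direct-summation gravity: acceleration on every particle."""
    d = pos[None, :, :] - pos[:, None, :]        # d[i, j] = pos[j] - pos[i]
    r2 = np.sum(d**2, axis=-1) + eps**2          # Plummer softening avoids r -> 0
    inv_r3 = r2 ** -1.5
    np.fill_diagonal(inv_r3, 0.0)                # no self-force
    return G * np.einsum("ij,ijk->ik", mass[None, :] * inv_r3, d)

def leapfrog(pos, vel, mass, dt, n_steps, eps):
    """Kick-drift-kick leapfrog; gravity is the only force, as for dark matter."""
    pos = np.array(pos, dtype=float)             # work on copies
    vel = np.array(vel, dtype=float)
    acc = accelerations(pos, mass, eps)
    for _ in range(n_steps):
        vel += 0.5 * dt * acc                    # half kick
        pos += dt * vel                          # drift
        acc = accelerations(pos, mass, eps)
        vel += 0.5 * dt * acc                    # half kick
    return pos, vel

# Example: two equal masses on a circular orbit with separation 1 (G = 1).
pos0 = np.array([[0.5, 0.0, 0.0], [-0.5, 0.0, 0.0]])
vel0 = np.array([[0.0, np.sqrt(0.5), 0.0], [0.0, -np.sqrt(0.5), 0.0]])
m = np.array([1.0, 1.0])
pos, vel = leapfrog(pos0, vel0, m, dt=1e-3, n_steps=2000, eps=1e-3)
print("separation:", np.linalg.norm(pos[0] - pos[1]))
```

The leapfrog is a common choice for gravitational dynamics because it is time-reversible and keeps orbits from drifting over long runs. The direct pairwise sum costs O(N²) per step, which is exactly why production codes use tree or mesh methods instead.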

Keeping track of these baryonic effects takes more computing time, and these simulations are already computationally intensive: something like a million hours of CPU time (and 6 months of wall-clock time) to simulate a galaxy. So where dark matter-only simulations can produce a whole suite of galaxies spanning a wide range of masses and behaviors, baryonic simulations give us only a small number. Specifically, we had 4 Milky Way-like galaxies to work with. They look like this.
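Taken together, those two numbers say something about the hardware involved. Here is a rough back-of-the-envelope estimate. It assumes the CPU time is spread evenly over the six months and takes a month as about 730 hours. On that basis, the run keeps a couple of hundred processor cores busy the whole time:

$$
N_{\text{cores}} \approx \frac{10^{6}\ \text{CPU hours}}{6 \times 730\ \text{hours}} = \frac{10^{6}}{4380} \approx 230
$$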

So what were we interested in doing with these galaxies?

Well, my interest was in the velocity distribution of dark matter near where the Earth and Sun sit in the Milky Way. When we search for dark matter with direct detection experiments, we're looking for dark matter particles that smash into a nucleus with enough force to give it a noticeable kick, which we can then pick up in the detector. This requires dark matter with enough kinetic energy to deliver a big enough kick: either very fast dark matter, or very heavy dark matter. What counts as fast enough or heavy enough depends on how you built your detector (which sets what a big enough kick is for your experiment).
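The "big enough kick" can be made quantitative with standard elastic-scattering kinematics. The largest recoil energy that a dark matter particle of mass m_χ and speed v can give a nucleus of mass m_N is E_max = 2μ²v²/m_N, where μ = m_χ m_N/(m_χ + m_N) is the reduced mass. The short sketch below uses a germanium-like target and a speed of 300 km/s. Both are illustrative choices, not values from the paper.

```python
C_KM_S = 299_792.458   # speed of light in km/s
AMU_GEV = 0.9315       # atomic mass unit in GeV

def reduced_mass(m_chi_gev, m_n_gev):
    return m_chi_gev * m_n_gev / (m_chi_gev + m_n_gev)

def max_recoil_kev(m_chi_gev, v_km_s, a_target):
    """Largest nuclear recoil energy (keV) that dark matter of mass m_chi and
    speed v can give a nucleus of mass number A (elastic, head-on collision)."""
    m_n = a_target * AMU_GEV                 # nuclear mass in GeV (approximate)
    mu = reduced_mass(m_chi_gev, m_n)
    beta = v_km_s / C_KM_S                   # speed in units of c
    return 2.0 * mu**2 * beta**2 / m_n * 1e6  # GeV -> keV

# Example: germanium-like target (A = 73), dark matter moving at 300 km/s.
for m_chi in (10.0, 100.0):
    print(f"m_chi = {m_chi:5.0f} GeV -> E_max = {max_recoil_kev(m_chi, 300.0, 73):.1f} keV")
```

At this speed, a 10 GeV particle can deliver at most about 2 keV, while a 100 GeV particle can deliver roughly 50 keV. Because the maximum kick grows as v², a light particle has to be moving much faster to clear the same detector threshold.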

Now, since dark matter is all around us and we sit about 8 kpc from the center of the Milky Way, we know that dark matter near us must be moving at about 200 km/s (which is pretty ridiculously fast when you think about it). That follows from basic arguments about how orbits work.
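The orbit argument is just Newton. For a circular orbit of speed v at radius r, v² = GM(<r)/r, where M(<r) is the mass enclosed. By the virial theorem, dark matter bound in the same gravitational well has typical speeds comparable to this circular speed. The sketch below treats the enclosed mass as spherically distributed, which is an approximation since the stellar disk is not spherical.

```python
import math

G_KPC = 4.30091e-6   # Newton's constant in kpc (km/s)^2 / M_sun

def enclosed_mass(v_km_s, r_kpc):
    """Mass (M_sun) inside radius r needed for a circular orbit at speed v,
    treating the enclosed mass as spherically distributed."""
    return v_km_s**2 * r_kpc / G_KPC

def circular_speed(m_enclosed_msun, r_kpc):
    """Circular orbital speed (km/s) at radius r around the enclosed mass."""
    return math.sqrt(G_KPC * m_enclosed_msun / r_kpc)

m_inside = enclosed_mass(200.0, 8.0)
print(f"Mass inside 8 kpc for a 200 km/s orbit: {m_inside:.2e} M_sun")
```

An orbital speed of about 200 km/s at 8 kpc implies roughly 7×10^10 solar masses inside the Sun's orbit. Turned around, that much mass forces anything orbiting there to move at about that speed.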

But we don’t know the velocity distribution very well at all: how many particles are moving faster than 200 km/s, and how many are moving slower? Is there velocity structure? For example, the visible matter in a galaxy rotates; does the dark matter? Is there something like a disk of dark matter, as there is for stars (which would change the local velocity structure)? All of these are possible, and the only ways to know the answer are to directly measure the speed of dark matter near us (which we can’t do until we discover dark matter, a bit of a chicken-and-egg problem) or to simulate it.

We have a guess, called the Standard Halo Model, which predates any detailed simulation of galaxies and is actually known to be wrong in some important ways. Largely out of inertia, most dark matter direct detection experiments use this model for the dark matter velocity distribution. That’s fine as far as it goes (no one is going to miss dark matter because they used the wrong halo model), but it can lead to mistakes when we compare different experiments (which are sensitive to dark matter moving at different speeds), or when we compare direct detection with other types of experiment (like the LHC).
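The Standard Halo Model is usually written as an isotropic Maxwell-Boltzmann velocity distribution in the Galactic rest frame, f(v) ∝ exp(-v²/v0²), cut off at the Galactic escape speed. The sketch below computes the fraction of particles moving faster than a given speed. The values v0 = 220 km/s and v_esc = 544 km/s are common choices in the direct detection literature. They are assumed here for illustration and are not taken from the paper.

```python
import math

V0 = 220.0     # km/s, commonly used most-probable speed (assumed)
V_ESC = 544.0  # km/s, commonly used escape speed (assumed)

def shm_speed_pdf_unnormalized(v, v0=V0, v_esc=V_ESC):
    """Truncated Maxwell-Boltzmann speed distribution in the Galactic frame."""
    if v < 0.0 or v > v_esc:
        return 0.0
    return 4.0 * math.pi * v**2 * math.exp(-(v / v0) ** 2)

def fraction_faster_than(v_cut, v0=V0, v_esc=V_ESC, n=20_000):
    """Fraction of dark matter particles with speed above v_cut (midpoint rule)."""
    dv = v_esc / n
    speeds = [(i + 0.5) * dv for i in range(n)]
    weights = [shm_speed_pdf_unnormalized(v, v0, v_esc) for v in speeds]
    total = sum(weights)
    above = sum(w for v, w in zip(speeds, weights) if v > v_cut)
    return above / total

print(f"Fraction above 400 km/s: {fraction_faster_than(400.0):.3f}")
```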

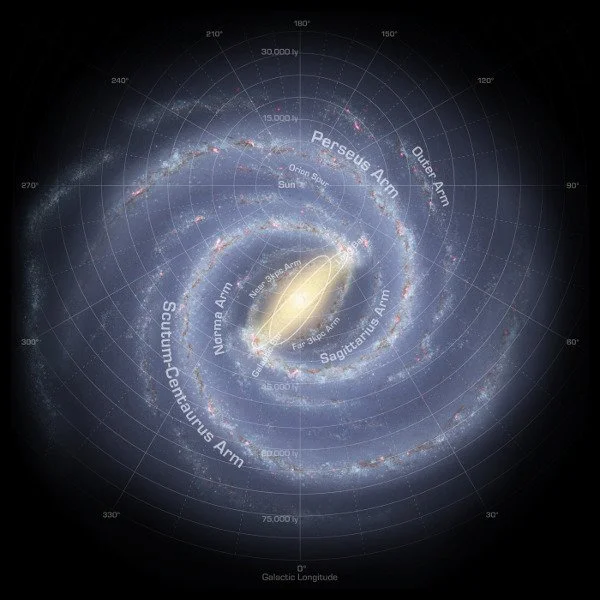

Map of the Milky Way. Robert Hurt (Spitzer/NASA)

People have of course simulated dark matter-only galaxies like the Milky Way and looked at what they tell us about the velocity of dark matter. However, even though dark matter outmasses regular baryons overall, we live in a special place: on a planet, near a star, deep inside a galaxy (don’t let Hitchhiker’s Guide to the Galaxy fool you, we live in a very fashionable part of the Milky Way). Where we live, there are more baryons than dark matter. So ignoring the motion and gravity of baryons in the part of the Galaxy where the Earth is could give you a very misleading picture of how dark matter is moving, and therefore of how to interpret the results of our searches for it.

So, using Alyson’s four simulated galaxies, which are approximately the mass of the Milky Way, her grad student Jonathan could look inside them and find all the dark matter that would be near the Earth, if the Earth were in one of those galaxies. Then he could work out the velocity distribution of those near-Earth dark matter particles.

Now keep in mind that in our simulations, a “dark matter particle” is a particle of about $10^4$ Solar masses, and our resolution is about 170 parsecs (meaning we can’t keep track of physics on scales smaller than that). That’s actually incredibly impressive: it’s the finest resolution I’m aware of for simulations with baryons. This is just a limitation of computing; we clearly can’t keep track of individual sub-nuclear-scale dark matter particles, so the simulation has to discretize the problem.

So we can’t just look right at where we would put the Earth and measure the dark matter there; instead, we have to average over a region around where the Earth might be. In our case, we take a donut-shaped region centered on the Sun’s distance from the center of the Milky Way (8 kpc), with a radius of 1 kpc. The resulting distribution of dark matter speeds for our four galaxies looks like this.

Velocity distribution of dark matter in the Galactic reference frame for the four simulated galaxies in 1601.05402. Red: dark matter-only simulations; blue: simulations with baryons. The right column is the ratio of the baryonic simulation to the dark matter-only simulation.
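The donut-shaped averaging region can be sketched as a particle selection. Here "1 kpc in radius" is read as the tube radius of a torus whose central ring sits at 8 kpc in the disk plane. A particle is kept if its distance from that ring is less than 1 kpc. The sketch assumes positions are already centered on the galaxy, with the disk in the x-y plane. The function names are hypothetical, and the particle data in the example are random placeholders, not simulation output.

```python
import numpy as np

def in_solar_torus(pos_kpc, r_ring=8.0, r_tube=1.0):
    """Mask of particles within r_tube of the ring of radius r_ring in the x-y plane."""
    r_cyl = np.hypot(pos_kpc[:, 0], pos_kpc[:, 1])           # cylindrical radius
    dist_to_ring = np.hypot(r_cyl - r_ring, pos_kpc[:, 2])   # distance to the ring
    return dist_to_ring < r_tube

def local_speed_distribution(pos_kpc, vel_km_s, bins):
    """Normalized histogram of speeds for the particles inside the torus."""
    mask = in_solar_torus(pos_kpc)
    speeds = np.linalg.norm(vel_km_s[mask], axis=1)
    hist, edges = np.histogram(speeds, bins=bins, density=True)
    return hist, edges, int(mask.sum())

# Placeholder data (NOT simulation output): uniform positions in a 20 kpc box
# and Gaussian velocities with a 150 km/s dispersion per component.
rng = np.random.default_rng(1)
pos = rng.uniform(-10.0, 10.0, size=(100_000, 3))
vel = rng.normal(0.0, 150.0, size=(100_000, 3))
hist, edges, n_selected = local_speed_distribution(pos, vel, bins=np.linspace(0.0, 800.0, 41))
print(f"{n_selected} particles selected")
```

For a ring radius R = 8 kpc and tube radius r = 1 kpc, the torus volume is 2π²Rr² ≈ 158 kpc³. Averaging over a region that large is what trades spatial precision for enough particles to build a distribution.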

The left-most column shows the velocity distributions for the versions of our galaxies without baryonic physics (dark matter-only). We compare them to the Standard Halo Model (black line) and to another estimate of the dark matter speed distribution, the Mao model (green). The Mao model fits the dark matter-only galaxies pretty well, which is not surprising since Mao et al. built their model from dark matter-only simulations. The Standard Halo Model massively overpredicts how much high-speed dark matter there should be in these galaxies.
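For comparison with the Standard Halo Model's Gaussian tail, the Mao model is assumed here to have the published form f(v) ∝ exp(-v/v0)(v_esc² - v²)^p below the escape speed. Check that form against Mao et al. before relying on it. Unlike a truncated Gaussian, this distribution falls smoothly to zero at v_esc rather than being chopped off. The parameter values below are purely illustrative, not fits to any of these galaxies.

```python
import math

def mao_speed_pdf(v, v0, v_esc, p):
    """Unnormalized Mao-style speed distribution:
    4 pi v^2 exp(-v / v0) (v_esc^2 - v^2)^p for 0 <= v < v_esc, zero otherwise."""
    if v < 0.0 or v >= v_esc:
        return 0.0
    return 4.0 * math.pi * v**2 * math.exp(-v / v0) * (v_esc**2 - v**2) ** p

def normalize(pdf, v_max, n=20_000, **params):
    """Tabulate pdf on a midpoint grid over [0, v_max] and scale it to unit area."""
    dv = v_max / n
    speeds = [(i + 0.5) * dv for i in range(n)]
    values = [pdf(v, **params) for v in speeds]
    area = sum(values) * dv
    return speeds, [val / area for val in values], dv

# Illustrative parameters only (not fits to any galaxy in the paper).
speeds, f, dv = normalize(mao_speed_pdf, 544.0, v0=120.0, v_esc=544.0, p=1.5)
tail = sum(fi for v, fi in zip(speeds, f) if v > 400.0) * dv
print(f"Fraction above 400 km/s: {tail:.3f}")
```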

Then we move to the center column, which shows the velocity distributions from the simulations with baryonic physics included. Now the Mao model isn’t a great fit, and the Standard Halo Model does a bit better. But importantly, there is still less dark matter at high speeds than the Standard Halo Model predicts. That matters because it is exactly those high-speed dark matter particles that direct detection experiments can see: they have enough kinetic energy to give appreciable kicks.

So Jonathan then transformed these results from the Galactic frame of reference to the Earth’s frame. Because the Earth moves through the dark matter halo around us, we are running into a wind of dark matter, which changes the relative speed of the dark matter particles. We go into this in some detail in the paper. In some of our simulations, the presence of baryons creates structure in the dark matter velocities. As a result, in some of our simulated galaxies the velocity of dark matter near the Earth has features you’d miss if you only looked at the velocity in the Galactic rest frame.
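The change of frame is a Galilean boost: a particle with Galactic-frame velocity v has velocity v - v_obs relative to an observer moving at v_obs. The Monte Carlo sketch below applies this boost to samples from a Standard Halo Model-style distribution rather than to simulation data. It assumes an observer speed of 232 km/s along the direction of Galactic rotation, a commonly quoted value for the Sun. It also ignores the Earth's roughly 30 km/s orbital motion around the Sun. The function names and parameters are illustrative.

```python
import numpy as np

def sample_shm(n, v0=220.0, v_esc=544.0, seed=0):
    """Galactic-frame velocities from an isotropic Maxwellian truncated at v_esc."""
    rng = np.random.default_rng(seed)
    sigma = v0 / np.sqrt(2.0)    # per-component dispersion for f ~ exp(-v^2 / v0^2)
    kept, n_kept = [], 0
    while n_kept < n:
        v = rng.normal(0.0, sigma, size=(n, 3))
        v = v[np.linalg.norm(v, axis=1) < v_esc]      # reject unbound particles
        kept.append(v)
        n_kept += len(v)
    return np.concatenate(kept)[:n]

def to_earth_frame(v_gal, v_obs):
    """Galilean boost: velocities as seen by an observer moving at v_obs."""
    return v_gal - np.asarray(v_obs, dtype=float)

v_gal = sample_shm(200_000)
v_earth = to_earth_frame(v_gal, [0.0, 232.0, 0.0])   # assumed observer velocity
s_gal = np.linalg.norm(v_gal, axis=1)
s_earth = np.linalg.norm(v_earth, axis=1)
for name, s in (("Galactic frame", s_gal), ("Earth frame", s_earth)):
    print(f"{name}: mean speed {s.mean():.0f} km/s, fraction above 500 km/s {np.mean(s > 500):.3f}")
```

The boost pushes many more particles above any fixed speed. That is why the Earth-frame distribution, not the Galactic one, is what enters direct detection rates.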

From this, Jonathan could calculate the expected rate of dark matter scattering in direct detection experiments. We considered three: LUX, CoGeNT, and DAMA/Libra. LUX is the most sensitive dark matter experiment to date. For light dark matter, it is also very dependent on the high-speed particles, because it is made of xenon, which is a relatively heavy nucleus. Dark matter therefore needs to be moving faster (compared to other experiments) to give a xenon nucleus a big enough kick to be seen.
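Turning the kinematics around gives the minimum speed a dark matter particle needs to produce a recoil of energy E_R: v_min = sqrt(m_N E_R / 2) / μ. The sketch below compares xenon, germanium, and sodium targets at the same recoil energy. The 10 GeV mass and 5 keV recoil energy are illustrative. Real experiments have different thresholds, so this shows the trend rather than any experiment's actual sensitivity.

```python
import math

C_KM_S = 299_792.458   # speed of light in km/s
AMU_GEV = 0.9315       # atomic mass unit in GeV

def v_min_km_s(m_chi_gev, e_recoil_kev, a_target):
    """Minimum dark matter speed (km/s, detector frame) that can produce an elastic
    nuclear recoil of energy E_R: v_min = sqrt(m_N E_R / 2) / mu, in units of c."""
    m_n = a_target * AMU_GEV                    # nuclear mass, GeV (approximate)
    mu = m_chi_gev * m_n / (m_chi_gev + m_n)    # reduced mass, GeV
    e_r = e_recoil_kev * 1e-6                   # keV -> GeV
    return math.sqrt(m_n * e_r / 2.0) / mu * C_KM_S

# Same illustrative recoil energy (5 keV) and dark matter mass (10 GeV) for each target.
for name, a in (("xenon", 131), ("germanium", 73), ("sodium", 23)):
    print(f"{name:9s}: v_min = {v_min_km_s(10.0, 5.0, a):.0f} km/s")
```

For light dark matter, the reduced mass is close to m_χ, so v_min grows with the nuclear mass and xenon needs the fastest particles. For dark matter much heavier than the nucleus, the ordering reverses. That is why the statement about xenon applies to the low-mass region.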

CoGeNT and DAMA/Libra have both claimed positive signals that could be interpreted as dark matter. The problem is that LUX (and other experiments before LUX took the crown of “best direct detection experiment”) doesn’t see these signals. You can try to come up with various ways for LUX to miss the dark matter. One idea that was floated was that the high-velocity tail of the dark matter distribution might be absent. That would suppress LUX’s signal while perhaps leaving DAMA/Libra’s and CoGeNT’s unchanged. That was the possibility, anyway.
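Why would a missing tail hurt xenon more? In the standard elastic scattering rate at fixed cross section, the velocity distribution enters only through the mean inverse speed, η(v_min) = ∫ F(v)/v dv over v > v_min, where F is the normalized speed distribution. The sketch below compares η for a Standard Halo Model-like distribution with and without everything above 450 km/s removed. It works in the Galactic frame for simplicity, with no Earth motion. The 450 km/s cutoff and the two v_min values are assumptions for illustration. The v_min values stand in for a light and a heavy target with light dark matter.

```python
import math

def shm_speed_shape(v, v0=220.0, v_esc=544.0):
    """Unnormalized truncated Maxwell-Boltzmann speed distribution, v^2 exp(-v^2/v0^2)."""
    if v < 0.0 or v > v_esc:
        return 0.0
    return v**2 * math.exp(-(v / v0) ** 2)

def eta(v_min, v_cut=544.0, n=20_000):
    """Mean inverse speed (s/km): integral of F(v)/v over v > v_min, where F is the
    speed distribution with everything above v_cut removed and renormalized."""
    dv = v_cut / n
    speeds = [(i + 0.5) * dv for i in range(n)]
    weights = [shm_speed_shape(v) for v in speeds]
    norm = sum(weights) * dv
    return sum(w / v for v, w in zip(speeds, weights) if v > v_min) * dv / norm

# Illustrative v_min values for a light and a heavy target with light dark matter.
for label, vmin in (("light target, v_min = 300 km/s", 300.0),
                    ("heavy target, v_min = 500 km/s", 500.0)):
    ratio = eta(vmin, v_cut=450.0) / eta(vmin)
    print(f"{label}: eta(no tail) / eta(full) = {ratio:.2f}")
```

In a crude single-bin picture, a cross-section limit scales as 1/η. Cutting the tail would therefore weaken a low-mass xenon limit enormously while leaving the light-target rate nearly unchanged.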

It turns out that we don’t find that. Our extrapolated direct detection bounds look like this. The vertical axis is the scattering cross section (higher means more scattering), and the horizontal axis is the dark matter mass. The real upper limit from the LUX experiment is the solid black line that swoops down as the mass increases (low-mass dark matter is hard to see, since it lacks the energy to leave a signal). The real CoGeNT best-fit region for their claimed signal is the black blob near 10 GeV, and DAMA/Libra’s is near 20 GeV.

Extrapolated direct detection limits for dark matter-only simulations (red, left) and baryonic simulations (blue, right).

The dark matter-only velocity distributions give the limits shown in red on the left, and the baryonic simulations give the limits in blue on the right. What we find is that things shift around. The LUX limit is weaker for a realistic dark matter velocity distribution than for the Standard Halo Model, but not by enough to let CoGeNT see dark matter while LUX sees nothing.

However, that’s ok. As a theorist, I want to be able to take limits from one experiment and apply them elsewhere, and the velocity distribution is a source of uncertainty that prevents me from doing so with confidence. We have only four simulated galaxies, so we’re not nailing down this distribution perfectly yet, but it’s a start.

In addition, we saw that our galaxies have a lot more “spread” in their behavior and properties than is usually found in dark matter-only simulations. This opens up a lot of possibilities for exploring what a Milky Way-like galaxy can look like. We may also need new ways to compare our simulated galaxies with the real thing if we want to know for certain what the dark matter in our Galaxy is doing, not just what an average dark matter particle in an average Milky Way-like galaxy is up to.